Proving Cantor’s theorem in Lean 4

Tuesday July 23 2024

In this post I walk through proving Cantor’s theorem in Lean 4. Along the way we will encounter some fundamental ideas in dependent type theory, as well as the Lean language.

The intended audience are people new to automated theorem proving. Parts 1-2 will review Cantor’s theorem. Skip to Part 3 if you know the theorem to jump straight into Lean.

If you want to tinker with the code without installing Lean, check out live.lean-lang.org

1. Informal statement of Cantor’s theorem

To begin, recall Cantor’s theorem:

Cantor’s theorem: Let \(S\) be a set, and let \(P(S)\) be the power set of \(S\). Then there is no surjective function from \(S\) to \(P(S)\).

If these words are unfamiliar, no worry, let’s define them:

a set is a collection of elements. We can list the elements of a set using brackets like \(S = \{...\}\). We write \(x \in S\) to mean that \(x\) is an element of \(S\) and \(x \notin S\) otherwise. Sets can be finite or infinite.

the power set is the collection of all subsets. For example if \(S = \{1,2,3\}\) then \(P(S) = \{\{\}, \{1\}, \{2\}, \{3\}, \{1,2\}, \{1,3\}, \{2,3\}, \{1,3,3\}\}\). Notice how we included the empty set \(\{\}\).

a function between sets assigns elements of one set to another, i.e. if \(A\) and \(B\) are sets then \(f:A \to B\) denotes a function from \(A\) to \(B\), so that each element \(a\) in \(A\) is assigned to an element \(f(a)\) in \(B\). \(A\) is called the domain and \(B\) is called the range (or codomain). Functions can only assign each element of the domain to one element of the range. Multiple elements of the domain can be assigned to the same element in the range, and not every element in the range need be assigned to.

a function is surjective if every element of the range is assigned to by some element of the domain. For example if \(A=\{1,2,3\}\) and \(B=\{4,5,6,7\}\) and \(f:A\to B\) is the function defined by \(f(1)=4\), \(f(2)=5\), and \(f(3)=6\), then \(f\) is not surjective because no element is assigned to \(7\). In other words, there does not exist an \(x\) in \(A\) such that \(f(x)=7\). More generally, a function \(f: A \to B\) is surjective if for all \(b \in B\), there exists \(a \in A\) such that \(f(a)=b\).

If \(B\) has more elements than \(A\), then it is impossible for there to be a surjective function \(A \to B\). In fact, this proves Cantor’s theorem whenever \(S\) is finite, because if \(S\) has \(n\) elements, then \(P(S)\) has \(2^n\) elements, and \(n\) is less than \(2^n\). But Cantor’s theorem holds more generally in the case when \(S\) may be be infinite.

As a consequence of Cantor’s theorem, \(P(S)\) is larger than \(S\), i.e. has a larger cardinality. In fact, because \(P(S)\) is also a set, we can apply the power set again and obtain \(P(P(S))\) which will be even larger. Starting with a countably infinite set \(S\), this results in an infinite sequence of cardinalities of increasing size called the Beth numbers.

2. Informal proof of Cantor’s theorem

To prove Cantor’s theorem, let \(S\) be a set, let \(P(S)\) be its power set, and let \(f: S \to P(S)\) be any function. We will show \(f\) cannot be surjective using a proof by contradiction. To do so, assume \(f\) is surjective with the goal of deriving a contradiction.

Because \(f\) is surjective, every subset must be assigned to by some element of \(S\). Consider the following subset:

\[ B = \{ x \in S \mid x \notin f(x) \} \]

Since we assumed \(f\) is surjective, there must exist some \(x^*\) in \(S\) such that \(f(x^*) = B\). But this yields a contradiction. Observe:

if \(x^* \in B\), then \(x^* \notin f(x^*)\), and \(f(x^*)=B\), so \(x^* \notin B\), a contradiction.

if \(x^* \notin B\), then \(x^* \in f(x^*)\), and \(f(x^*)=B\), so \(x^* \in B\), a contradiction.

Both cases result in contradiction, so this negates the original assumption that \(f\) is surjective and completes the proof by contradiction.

3. Formal statement of Cantor’s theorem

Lean is based on dependent type theory where we work (roughly speaking) with types instead of sets. If T is a type, then x : T means x is a term of type T, analogous to x belonging to T as a set.

T1 and T2 are two types, then T1 → T2 is itself a new type, the type of functions from T1 to T2. Let’s first define the property of a function being surjective.

def surjective {A B : Type} (f: A → B) : Prop :=

∀ b : B, ∃ a : A, f a = b

Some remarks:

- Lean definitions takes the form

x : T := exprwherexis the name of the term,Tis its type, andexpris an expression providing the definition. - This code block defines a new term called

surjective. The type of this term is a dependent function type, whose inputs are the typesAandBalong with a functionf: A → Band whose output is the proposition thatfis surjective. {A B : Type}is wrapped in brackets while(f: A → B)is wrapped in parentheses, they are functionally similar but somewhat of a stylistic choice, it says that the typesAandBcan be implicitly inferred fromf, and the result takes a single inputfand returns a true or false proposition.∀means “for alL” and∃means “there exists”, the definition matches the one given earlier in section 1.f a = bis notation forf(a) = b

Exercise: state (but don’t prove) the theorem that the composition of two surjective functions is surjective.

Solution:def composition_of_surjective_is_surjective (A B C: Type) (f: A → B) (g: B → C) (h1: surjective f) (h2: surjective g) : surjective (g ∘ f) :=

sorry

def subset (A: Type) : Type := A → Prop

Can you figure out what is going on? This definition takes a type A as input, and yields a new type, the type of functions from A to Prop (propositions). That’s what a subset is! It is a function that says yes/no on each element, determining whether it belongs to the subset. Thus, subset A is the type of all such functions, that is, the collection of all subsets.

theorem Cantor (A: Type) (f: A → subset A) : ¬ surjective f :=

sorry

¬ is the symbol for negation. The theorem says for any type A, for any function f mapping terms of type A to terms of type subset A, then f is not surjective.

4. Formal proof of Cantor’s theorem

Proofs in Lean take place by a series of tactics. These tactics transform the goal state in sequence until the proof is completed. Normally the intermediate goal states are not visible when reading Lean source code, but I will display them to give a sense how the proof works.

Maybe I should just print the full proof before stepping through? Here it is, with comments marked by--:

theorem Cantor (A : Type) (f : A → subset A) : ¬ surjective f := by

-- Begin proof by contradiction

apply Not.intro

-- Introduce contradictory hypothesis (that f is surjective) as h0

intro h0

-- Define the "bad" subset B

let B : subset A := fun x => ¬ (f x) x

-- Show that x is an element of B if and only if x is not an element of f(x)

have h1 : ∀ x: A, B x ↔ ¬ (f x) x := by

intros

rfl

-- Since f is surjective, let z be the element of A such that f(z) = B

obtain ⟨z, h2⟩ := h0 B

-- From h1, it follows that z is an element of B if and only if z is not an element of f(z)

have h3 : B z ↔ ¬ (f z) z := h1 z

-- But f(z) = B, so this is a contradiction. Hard to explain this part, it just works

rw [h2] at h3

have h4 : B z → False := fun x => h3.1 x x

exact h4 (h3.2 h4)

TODO

Refuse to forfeit impractical thinking

Thursday July 11 2024

I may have failed to complete graduate school, but I refuse to forfeit impractical and abstract thought and be made to think about trivial practical things.

Two things you can’t get back

Thursday July 4 2024

are people and time.

Autoprosecution

Thursday July 4 2024

AI lawyers sound cool for interpreting legal text until they’re deployed en masse as federal prosecutors under a GOP regime.

Goals vs behavior

Wednesday July 3 2024

An “agent” is a system that takes actions in order to achieve a goal, and “intelligence” measures its competence at doing so.

But how does an agent know it has achieved its goal? The goal being achieved is a property of the state of the world, something for which the agent only partially observes.

In reinforcement learning, you can address this using the very sparse reward, +1 for being in the goal state and 0 otherwise. This should encourage the agent to learn a representation of the world it can use to distinguish a goal state from a non-goal state.

But something seems amiss. The agent’s true goal is to receive +1 reward, not for the world to be in the goal state. I guess this is the alignment problem.

Is there a way to make an agent genuinely interested in the true state of the world?

This paper seems relevant: Ontological crises in artificial agents’ value systems by Peter de Blanc (2018). He seems to describe the same problem:

One way to sidestep the problem of ontological crises is to define the agent’s utility function entirely in terms of its percepts, as the set of possible percept-sequences is one aspect of the agent’s ontology that does not change. Marcus Hutter’s universal agent AIXI [1] uses this approach, and always tries to maximize the values in its reward channel. Humans and other animals partially rely on a similar sort of reinforcement learning, but not entirely so.

We find the reinforcement learning approach unsatisfactory. As builders of artificial agents, we care about the changes to the environment that the agent will effect; any reward signal that the agent processes is only a proxy for these external changes. We would like to encode this information directly into the agent’s utility function, rather than in an external system that the agent may seek to manipulate.

Also The lens that sees its flaws by Yudowsky

Project 2025 scary

Tuesday July 2 2024

Scotus just gave presidential immunity. There is a lot of fear of losing democracy going around… AOC has called for some of the justices to be impeached. It seems comparable to the Enabling act of 1933.

It is very frightening for the election to be only a few months away. I don’t have anything to input, just recording my feelings.

What makes full?

Monday July 1 2024

What makes full human life? To have magnamity, shape your surroundings and face the consequences of your actions? Or to be part of a collective? Some people want to put trillions of humans in space but that thought disturbs me.

Existential disturbance

Monday July 1 2024

Sometimes reading people from past makes me feel one and at peace with the whole of humanity. Other times it makes me feel like a helpless worm…

Assume you will survive

Friday June 28 2024

If you are faced with two possible scenarios, one in which you survive and one in which you don’t, you should behave as if you are in the one in which you do.

This is a variation of Pascal’s wager. I can’t think of any good examples.

The mathematical auto-coder🤖⌨️☑️

Sunday June 23 2024

I am dreaming of a system that generates provably-correct code given user specifications. That is, the system takes a specification as input and yields two outputs, a piece of code, and a proof that the code meets specification. In other words, two generation steps, code generation and proof generation.

Some important people:

Book compressing

Thursday June 20 2024

Call me stupid, but when I am reading some old book like Notes from the Underground I find like 25-50% of the text to be unnecessary and compressible. I want an LLM algorithm to compresses old books like this into more accessible less verbose versions. Maybe that would revive and reduce the culture barrier to old ideas. One problem is mistakenly removing important quotes and esoteric references.

Edit: someone already made this and called it “magibook” lol

Nocturnal panic feels

Wednesday June 19 2024

like being stranded in a tiny dinghy in a stormy sea on a black night.

Are corporations not already superintelligent AI?

Tuesday June 18 2024

I don’t really like the term “AI”, partly because it’s been overloaded by generative models like GPTs, but also what even is “artificial” - a non-descendant of LUCA?

I prefer the term intelligent agent or simply “agent”. An agent is an entity that takes actions to achieve goals. Biological life forms appear to be agents, with humans the most intelligent. According to instrumental convergence, agents tend towards self-preservation, resource acquisition, and self-improvement. Therefore, the existence of an agent with superhuman intelligence (aka superintelligence) would pose an existential risk to humanity.

Sounds right- but wait, aren’t companies, corporations, and nations already like this? Why do these entities not already pose existential risk, or do they? Is it because they are composed of human workers/managers/executives and thus depend on their existence? Are they simply not intelligent enough? Or are they not even “agents”?

People have discussed this:

Corporations vs. superintelligences by Eliezer Yudowsky (2016).

Things that are not superintelligences by Scott Alexander

Video essay: Why Not Just: Think of AGI Like a Corporation? by Robert Miles (2018) (click here for transcript).

Reviewing the Literature Relating Artificial Intelligence, Corporations, and Entity Alignment by Peter Scheyer (2018).

How Rogue AIs may Arise by Yoshua Bengio (2023)

Multinational corporations as optimizers: a case for reaching across the aisle on LessWrong (2023)

AGI alignment should solve corporate alignment on LessWrong (2020)

Organisations as an old form of artificial general intelligence by Roland Pihlakas (2018).

AI Has Already Taken Over. It’s Called the Corporation by Jeremy Lent (2017).

Most of the sources listed agree that corporations are intelligent agents, and furthermore they possess general intelligence by merit of being made of humans who have general intelligence. They tend not to believe, however, that corporations are superintellignt.

The problem seems to be their inability to combine the intelligence of their members. The main ways to do this are

- aggregation, where a large number of individuals work on the same task. For example, a board room has everyone come up with an idea, and they select the best idea.

- specialization, where each individual becomes best at a single task. For example, an engineering team consisting of mechanical, computer, and software engineers.

- heirarchy, where teams of individuals work (via above methods) on some task and send the result to their superior. For example, a military chain of command.

Notice all these methods are bottlenecked by human intelligence in some way. Aggregation is limited by the smartest human in the room, and specialization is limited by the smartest human at each task.

Heirarchy is an interesting case, because individuals at higher levels deal with more abstract concepts and are thus enabled to do higher-level reasoning. This is similar in neural networks where later layers deal with higher-level features.

Soldiers deal with the mechanical details of their weapons and vehicles, commanders think in terms of squadrons and battlefield positions, and generals thing in terms of grand strategy. But still, the general is a human and so the grand strategy will be explainable in human terms, something not to be expected of a superintelligent AI.

Robert Miles made this point well:

Corporations are able to combine human intelligences to achieve superhuman throughput, so they can complete large complex tasks faster than individual humans could. But the thing is, a system can’t have lower latency than its slowest component. And corporations are made of humans, so corporations aren’t able to achieve superhuman latency. […] Corporations can get a lot of cognitive work done in a given time, but they’re slow to react. And that’s a big part of what makes corporations relatively controllable: they tend to react so slowly that even governments are sometimes able to move fast enough to deal with them. Software superintelligence, on the other hand, could have superhuman throughput and superhuman latency, which is something we’ve never experienced before in a general intelligence.

Important footage

Thursday June 13 2024

Today I found some colorized footage depicting aftermath of the two main horrors of the second world war (I will link them below). Not light watching, but very emotionally moving. Colorization despite being artificial and often subtely wrong makes the footage much more visceral.

After watching it I felt a weird contrast between what I just watched, arguably some of the most important documented footage in history, and some recent AI generated video tech such as Sora, Kling, Luma, etc. Such a contrast that I felt disgusted with a lot of “generative AI” in general.

Why do we archive data at all? I guess two reasons, to record history and for entertainment. The latter, novels/songs/movies etc. is perhaps also a form of recording by the way of synthesizing and aggregating a lot of various lived experienced, even if the particular thing is fictitious. But generative AI slop doesn’t seem to be recording much of anything. If anything it makes all the real important footage like the aforementioned less trustworthy, which is a terrible outcome. So are the engineers working on generative AI a bunch of useful idiots serving totalitarian dictators or do they think they are doing something good by democratizing freedom of expression?

Video references (warning: graphic)

- “Hiroshima-Nagasaki, August 1945” produced by Erik Barnouw in 1970, original footage recorded by Akira Iwasaki in 1945.

- “Belsen Concentration Camp”, recorded in 1945 and used as evidence in the Nuremberg trials.

- colorized: https://www.youtube.com/watch?v=mgwWq2cp2qM

- original: https://catalog.archives.gov/id/24025

Being stupid

- colorized: https://www.youtube.com/watch?v=mgwWq2cp2qM

- original: https://catalog.archives.gov/id/24025

Wednesday June 12 2024

I find myself pretty stupid.. I am not good at slow deliberate thinking. I’m also not very good at reading, even though there are a lot of books, I am tantalized by their existence. So I’m left to think thoughts people already had and about dumb things like jobs and money.

Existential panic

Wednesday June 12 2024

This post may sound pretentious but it’s about something I find genuinely emotionally distressing. I am currently dealing with panic disorder, which is a very terrible thing, and often the panic comes at certain times of day without any particular reason. It tends to pull my mind towards thoughts of peril and doom and decay, essentially death. These concepts I can handle: we all die, it is a fact of life, and that is fine with me by now; besides I am young and relatively healthy and death is far away.

But there is another type of thought I absolutely cannot reconcile, which I guess is the feeling of existential absurdity. Why am I here? in this form, in this body, on this planet, in this universe? I am a biological life form, generated by evolution - not just that but a human being? And I’m me while I watch fellow humans blow each other apart in war, fellow people I could have just as easily been in (and in some sense maybe am in a parallel life). Why is any of this real? Am I like, god experiencing my own creation? Or I am trapped in hell? What the fuck is any of this?

For those thoughts I don’t have a quick calming answer for myself.

Future thinking

Wednesday May 29 2024

Don’t feel very good about future of humans today. I saw polls about increasing bigotry among youths in Netherlands. It is probably due to social media algorithms. So while the youth becoming increasingly fascist, the planet is warming and basic living conditions deteriorating. It gives me sick feeling in my stomach because I don’t see how humanity can recover except for a long periods of war and struggle that I won’t live to see the end of. It also feels like normal methods of human government like organizations and committees don’t work anymore against global powers and internet algorithms. What nightmare being created.

Idea and being

Tuesday May 28 2024

Was reading about demographics problems in Korea, not enough people having children so they pay you to get reverse vasectomy and trying to start government-run Tinder. Like in America, these stupid ideas are a bandaid fix for real problems: unaffordable housing, childcare, and other shitty living conditions. Then I also read about the feminist 4B movement which says: no sex, no children, no dating, no marriage. Badass!

Anyway, at home, some people close to me going through breakup despite having two childs. Sad for them. They already considered it before, but put it off and had child 2, then finally broke up anyway. I wonder if they made a few different decisions, if child 2 would never exist?

It makes me think, before we are born, we are just an idea in our parents’ (or parent’s) mind. The idea becomes reality and we exist. So there seems to be some equivalence between those two. Humans exert a lot of power over the world, the future is very much deteremined by the ideas in peoples’ minds, which gradually transform into material being.

Bonus reading: the non-identity problem aka the “paradox of future individuals”. Here’s an example: Suppose some company wants to build a dirty polluting factory in some uninhabited region which is sure to attract young workers and families and business, and thus future generations of inhabitants. But some environmentalist complains that those future generations, the children of the workers, will be at risk of cancer due to the polluting factory, so it would be wrong to build it. But without the factory, those children wouldn’t have been born, so how can it be wrong when the alternative is for them to not even exist?

One in a million chance

Tuesday May 14 2024

I’ve been wasting time on Turtle WoW lately and reminded of a classic problem in probability.

If a rare event has a 1-in-100 chance to occur, and you repeat it 100 times, it is guaranteed to happen right?

Not quite. The negative binomial distribution for it gives us the following formula for the chance of success after 100 attempts:

\[ 1 - \left( 1 - \frac{1}{100}\right)^{100} \,=\, 0.6339... \]

In fact, replace a 1-in-100 chance with 1-in-million chance, and the result will be about the same after a million attempts:

\[ 1 - \left( 1 - \frac{1}{1000000}\right)^{1000000} \,=\, 0.6321... \]

What is this magical 63% number? It turns out to be a constant related to Euler’s number given by the following limit:

\[ \lim_{n\to\infty} \left( 1 - \left( 1 - \frac{1}{n}\right)^{n}\right) \quad = \quad 1 - \frac{1}{e} \quad = \quad 0.63212055... \]

Public key encryption: a quicky in diagrams

Thursday May 9 2024

TLDR: I explain public key (asymmetric) encryption using string diagrams. For the standard references, read New directions in cryptography by Diffe and Hellman (1976).

In symmetric key encryption you have 3 types of data:

- \(X\) of plaintext (unencrypted) messages,

- \(Y\) of ciphertext (encypted) messages,

- \(K\) of keys,

and two one-way functions,

- \(e: X \times K \to Y\), the encryption algorithm or cipher,

- \(d: Y \times K \to X\), the decryption algorithm or decipher.

Given a message \(x\) and a key \(k\), one computes the encrypted message \(y = e(x,k)\) and can only recover \(x\) using the same key via \(x = d(y,k)\); that is, they satisfy

\[ d(e(x, k), k) = x \]

In fact, even stronger, a message encrypted with key \(k\) can only be decrypted using \(k\) again, no other key \(k'\) will work:

\[ d(e(x, k), k') = x \quad \iff \quad k = k', \quad \forall k' \]

The symmetric communication protocol goes like this: Alex wants to send a message \(x\) to Blair, and we assume they both know the key \(k\) beforehand. Alex computes the ciphertext \(y = e(x, k)\) and sends it across the channel. Blair receives the ciphertext and recovers the plaintext via \(x = d(y, k)\). Simple! Here is a diagram showing which parties observe which data:

Notice how the communication channel (highlighted in red) only sees the ciphertext \(y\), and not the plaintext \(x\) nor the key \(k\).

On the other hand, asymmetric encryption like RSA and ECC does not assume Alex and Blair share the key \(k\) beforehand. In this scenario, we have four data types:

- \(X\), \(Y\), and \(K\) as before,

- \(P\), a set of public keys

and three one-way functions:

- \(p: K \to P\) which computes a public key from a private key,

- \(e: X \times P \to Y\), a cipher which takes the message and a public key as input and yields a ciphertext,

- \(d: Y \times K \to X\), the decipher which takes the encrypted ciphertext and a private key and yields the decrypted plaintext.

If \(k\) is a private key and \(p=p(k)\) is the corresponding public key, then \[ d(e(x, p(k)), k) = x \] In fact, even stronger as before: \[ d(e(x, p(k)), k') = x \quad \iff \quad k = k', \quad \forall k' \]

The asymmetric communication protocol now goes like this: Alex wants to send a message \(x\) to Blair, but they don’t share a private key. So, Blair takes their private key \(k\) and generates their public key \(p = p(k)\), then sends it across the channel to Alex. Alex then computes \(y = e(x, p)\) and returns the encrypted message back across the channel to Blair, who then recovers the original message via \(x = d(y, k)\).

As before, notice how the channel only sees the ciphertext and Blair’s public key, but not the original message nor Blair’s private key \(k\); furthermore, Alex never sees Blair’s private key either!

Of course, I never described how any such one-way functions with the above properties could be constructed. That’s where all the prime number bullshit comes in!

AI therapy

Monday May 6 2024

Is AI therapy possible? is it useful? is it ethical? stay tuned!

2020s vibes

Thursday May 2 2024

Just had this weird vision before bed. I’m in my parents’ house in late spring and the temperature soars unpredictably past the highest summer high, past the low 100s, and we can feel it thick in the air. But instead of relief the AC breaks and the tap water dries up, and instead of being saved the groceries are emptied and the roads become clogged. So we sluggishly wait for it to end but it doesn’t.

Self-convincing

Wednesday May 1 2024

My birthday is in a few days!

Anyway, I have done a lot of self-convincing that my failing PhD program was actually good for me, because now I can do something practical.

The reality is the job market for that practical something is fucked, and I don’t have the network or background to enter it. Any person giving advice in this situation says to further your education - whoops!

I’ve also realized that any startup or generative AI idea I have will be had by Ivy league graduates with 10x more friends and 100x more influence and potential than me. So, I seem to have condemned myself to Walmart or Uber, jobs for the antisocial autist which I would have been except for my privilege. 🤠🔫⚡🐜🌼

Pointlessness

Monday April 29 2024

Since failing graduate program and probably no longer to enter that career, main emotion is pointlessness and lack of purpose.

What are language models good for?

Sunday April 28 2024

What are language models even good for? TODO

Panic relief

Sunday April 28 2024

Anxiety! Panic! a cruel dictator that often rule and ruin my life. Here are my coping strategies:

Understand and avoid short-term triggers. Mine include (TW) any mention of the heart, heartrate, pulse, or diseases related to the blood or heart.

Understand and avoid medium-term causes. For me this includes more than 1 cup of coffee per day, and skipping SSRI medication.

Recognize panic symptoms. For me are psychosomatic chest pain (random pain spikes, dull chest aches) and shortness of breath and unsatisfying breaths. Your mind can exaggerate pain and only notice pain near your area of concern (e.g. chest). Another one is jaw pain in the TMJ muscle from grinding or clenching, which may occur unconsciously during anxious sleep. Since often occur to me at night, I become acutely aware no urgent care center is open.

Relax muscles and perform breathing exercises. The shortness of breath often comes from unconscious tension in the chest, so relax your chest. A normal unstrained breath should raise and lower the stomach, not the chest. My preferred exercise is 4 seconds each on in/hold/out/hold. Try listening to a youtube video guide or asmr. For nighttime clenching, consider a mouth guard.

In last resort, immediate relief drugs. The main one are alprazolam (Xanax), lorazepam (Ativan), and diazepam (Valium). I have heard of beta blockers but they seem less relevant.

Accept precarity of life and appreciate its absurd beauty. If you can read this, you already made it far: you are human, you are alive and with vision or e-reader and have internet access. Life owes us nothing, and we can’t obsess over every possible misfortune or else lose the human spirit.

Cyberattacks!

Friday April 26 2024

TODO

Grad school illusion

Thursday April 18 2024

Solar eclipse seems to change peoples minds like at the battle of the eclipse. My friend quit his job. So what perspective change did it cause in me?

I start graduate school in Fall 23. Went about awful as possible, turned into agoraphobic wreck, dropped all correspondence and failed it all. So I’m back home living in the attic.

I was in a mindset where research was the only way for my humanity to matter and the only way to contribute to society. This seems noble but is actually self-centered and covertly narcissistic. It allows no failure and attributes no importance to people outside academia.

Simple flow kick system

Wednesday April 10 2024

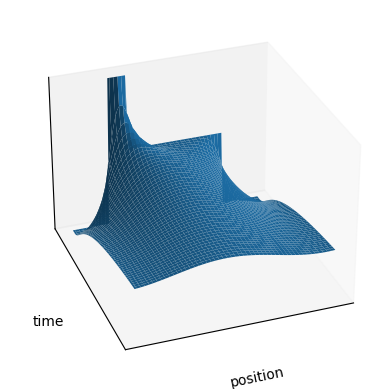

In this post I analyze a toy hybrid dynamical system called a “flow-kick” system, which alternates between continuous-time (flow) and discrete-time (kick) dynamics. Here is an example of a time series plot of such a system which alternates between exponential growth and a constant negative kick from Quantifying resilience to recurrent ecosystem disturbances using flow-kick dynamics (2018):

You could imagine they are wildlife that grow in population naturally at some rate \(r\), and you want to harvest/cull them at some fixed amount \(k\) at a fixed annual frequency such that their population remains stable (the orange line). Another example is like, compound interest with interest rate \(r\) on which you make constant payments of amount \(k\), and you want to eventually pay it off (the red line).

A trajectory \(x(t)\) for this system satisfies:

- \(\frac{d}{dt}x(t) = f(x(t))\) for all \(t \notin \mathbb{Z}\), where \(f(x)=rx\),

- \(g(x^-(t)) = x^+(t)\) for all \(t \in \mathbb{Z}\), where \(g(x)=x+k\).

where \(\mathbb{Z} = \{...,-2,1,0,1,2,...\}\) is the set of integers, the discrete timepoints where the system undergoes a kick, assumed to have unit spacing. In general we could replace \(f\) and \(g\) with some other flow and kick functions respectively. This is almost enough to specify a unique solution, except we haven’t said whether \(x(t)\) should be equal to \(x^+(t)\) or \(x^-(t)\) whenever \(t\) is at a kick point. To resolve this ambiguity, we can choose the former option by adding a third condition,

- \(x(t)\) is right-continuous

Ignoring kicks, the solution to the differential equation above is \(x(t) = x(0) e^{rt}\). Setting \(t=1\), we find \(x(1)=x(0) e^r\). Therefore the flow step really just multiples by the constant \(e^r\). It follows that

\[ x(t+1) = x(t) e^r - k \]

for all \(t \in \mathbb{Z}\). This is a non-homogeneous recurrence relation. If we set \(x^*=x(t+1)=x(t)\), we can find the fixed point

\[ x^* = \frac{k}{e^r-1} \]

This is an unstable fixed point above which the solution grows without bound, and below which the solution goes to negative infinity, or more realistically, to zero, in which case you might instead use the kick function \(g(x) = \min\{x-k,0\}\).

All stories are stories about people

Friday April 5 2024

“All stories are stories about people”.

Remind me to update this post whenever I figure out who came up with this concept first.

Some scifi stories are about automation and the rise of machines, at least on the surface. But they really speak about capitalist human relations between worker and owner.

Similarly stories about disaster are not really about the disaster itself, but about the people you fear to lose.

People are the atoms of our lives, not physical atoms - we had to discover those.

Notes on percolation

Sunday March 31 2024

What is “percolation” on a network? Edit: I change my mind let’s call them graphs not networks. For an overview, we can check Wikipedia. For some more details, we can read Percolation and Random Graphs by Remco van der Hofstad. Let me summarize what I found:

- Percolation on an infinite lattice

The methods in this first section only apply to infinite lattices, which are infinite graphs with some repeating symmetry. The simplest example is the integer lattice \(\mathbb{Z}^d\) of dimension \(d\). When \(d=2\), it is the infinite chessboard, when \(d=3\), it is Minecraft, etc. Every node \(x \in \mathbb{Z}^d\) is connected to \(2d\) neighbors, left/right, up/down, etc… for each dimension.

For a given fixed number \(p \in [0,1]\), the “connection probability”, we imagine all the edges in \(\mathbb{Z}^d\) are “enabled” (independently) with probability \(p\) and “disabled” otherwise. We can now ask, for a given \(p\), what is the probability there is an unbroken path between two given nodes? Whatever it is, it should correlate with \(p\), and be equal to \(p\) in the extreme cases \(p=0\) and \(p=1\).

Given a node \(x \in \mathbb{Z}^d\), let \(C(x)\) be the “cluster” of \(x\), the set of nodes connected to \(x\) by some path. These clusters form a unique partition of space into connected components. When \(p=0\), no edges are enabled, so \(C(x)\) is just \(x\) by itself with probability 1. When \(p=1\), then \(C(x)=\mathbb{Z}^d\), the whole lattice, with probability 1.

Now we ask, what happens in the intermediate cases when \(p \in (0,1)\)? To answer, we can investigate when the clusters are likely to be finite in size, like when \(p=0\), and when they are likely to be infinite in size, like when \(p=1\). An application of Kolmgorov zero-one law says there must exist a number \(p_c \in [0,1]\) called the critical value, or the phase transition point, such that when \(p<p_c\), every cluster is finite with probability 1, and when \(p>p_c\) then every cluster is infinite with probability 1. (In the latter case, there is only 1 infinite cluster, as any infinite cluster must be unique, since they are bound to unite at some point.)

- In case it’s not clear what an “infinite cluster” means, you can also choose some large number \(R>0\) and calculate the probability of a path from the origin in \(\mathbb{Z}^d\) to the edges of the \(d\)-dimensional bounding box \([-R,R]^d\), and take the limit as \(R \to \infty\).

- We say “every cluster” because the lattice is homogeneous, so every node will share the same qualitative properties such as infinite cluster probability.

- In the case that \(p_c=0\) or \(1\) the phase transition is called trivial.

When \(d=2\), Kesten (1982) showed \(p_c=\frac{1}{2}\). In other words if more than 50% of edges are enabled on the infinite chessboard, an unbroken path to infinity is guaranteed… weird! For other values of \(d\), and other types of lattices, it is rare to find the exact value of \(p_c\). Another known case is an \(r\)-regular tree has \(p_c=\frac{1}{1-r}\).

We can sometimes bound the critical value: on \(\mathbb{Z}^d\), if \(d \geq 2\), then \(\frac{1}{2d-1} \leq p_c < 1\), which guarantees it is non-trivial. If the graph is transitive with degree \(r\), then \(\frac{1}{r-1} \leq p_c\).

- Finite graphs

Let \(G\) be a undirected graph and let \(V\) be the set of nodes, with \(|V|=n\). The degree of a node \(x \in X\) is the number of other nodes connected to \(x\). We can define

- the degree sequence \(D_0,D_1,D_2,\cdots\) where \(D_k\) is the number of nodes with degree \(k\). It follows that \(\sum_{k=0}^{\infty}D_k=n\), and also \(D_k\) must be zero eventually.

- the degree distribution \(d_0,d_1,d_2,\cdots\) which is just \(d_i = \frac{D_i}{n}\). It turns the degree sequence into a probability distribution with \(\sum_{k=0}^{\infty} d_k=1\)

A scale-free graph is one that has a power-law degree distribution, \(d_k \sim k^q\) for some exponent \(q\). This is not a precise definition for finite graphs (and hence real world networks), but can be checked in practice by doing linear fit to the loglog equation \(\log(d_k) = a_0 + a_1 \log(k)\).

A graph is clustered if for any nodes \(x,y,z\), an edge between \(x,y\) and an edge between \(x,z\) often imply an edge between \(y,z\), that is, more than would be expected at random. One can define the clustering coefficient \(C\) of a graph based on the preceding principle using a big equation I won’t repeat here. A typical unclustered graph satisfies \(C=C_0=\frac{2|E|}{n(n-1)}\), where \(|E|\) is the number of edges. Thus a graph is considered clustered when \(C\) is much greater than \(C_0\).

A small-world graph is one where the distance between nodes is relatively small, like the Kevin Bacon or Erdos number thing (defined more precisely later).

- Random graph processes

Let \(G_t=\{G_t \mid t =0,1,2,\cdots \}\) be a sequence of graphs, called a graph process.

Given a graph process \(G_t\) let \(d_{k,t}\) be the degree distribution at step \(t\). The process is called sparse if there exists a limiting degree distribution \(d_k\) such that \(\lim_{t\to\infty} d_{k,t}=d_k\).

A sparse graph process \(G_t\) with limiting degree distribution \(d_k\) is called scale-free if there exists some \(\tau\) such that \(\lim_{k\to\infty} \frac{\log(d_k)}{\log(1/k)} = \tau\).

The graph process is called highly clustered if \(\lim_{t\to\infty} C_t > 0\), where \(C_t\) is the clustering coefficient at step \(t\).

The graph process is small-world if, given the measurement \(H_t\) of “average distance” between nodes in \(G_t\) so-defined, then \(\lim_{t\to\infty} \text{Prob} (H_t \leq K \log t) = 1\) for some constant \(K\).

- Some examples

He introduces a class of models calls inhomogeneous random graphs.

- This includes as a special case the well known Erdos-Renyi graph, considered as a graph process where \(t=0,1,2,...\) is the number of nodes and \(p = \frac{\lambda}{t}\), for some fixed number \(\lambda\). The limiting degree distribution of this process is \(d_k = \frac{e^{-\lambda} \lambda^k}{k!}\), a Poisson distribution with parameter \(\lambda\), so it is sparse but not scale-free.

Next introduces the preferential attachment (Barabási–Albert) model, where new nodes are introduced at each step and attach to existing nodes with more edges with higher probability. It is a scale-free graph process with the power law \(d_k \sim k^{-3}\), and hence an interesting case study of the precending theories.

So far we considered percolation on infinite graphs, properties of finite graphs and graph processes. So how do we unify the two, and model percolation on finite random graph processes? Unfortunately, the remaining parts which explain this (Theorem 1.17 in Section 1.3.3 and onward) go above above my head…

Dijkstra’s generalized pigeonhole principle for bags

Friday March 22 2024

Pigeonhole principle is a theorem in mathematics that says, in plain language: if you release a flock of pigeons into a pigeon coop, and every pigeon flies into a pigeonhole, and the number of pigeons is greater than the number of pigeonholes, then there must be at least one pigeonhole containing more than 1 pigeon.

Apparently Dijkstra thought this version was too weak, and wrote “The undeserved status of the pigeon-hole principle” in 1991 where he states his preferred version:

for a nonempty, finite bag of real numbers, the maximum is at least the average, and the minimum is at most the average.

Note he did not say finite set of real numbers. Instead, by “bag” he means a multiset, or unordered list, a set where repeats are allowed (see: Why does mathematical convention deal so ineptly with multisets?)

But how does this version generalize the original? The idea is that we define the multiset \(S\) to be the “census” of pigeonboxes of each possible size. In the example pictured above, we would have \(S=\{2,1,1,1,1,1,1,1,1\}\), where the minimum is 1, the maximum is 2, and the average is 10/9. Dijkstra’s theorem correctly says \(1 \leq 10/9 \leq 2\).

Let’s now prove that Dijkstra’s principle is indeed stronger, i.e. that it implies the original principle. First let’s clearly state each.

Pigeonhole principle version 1: Let \(A\) and \(B\) be finite sets, and let \(f:A \to B\) be a function, assigning each \(a \in A\) to \(f(a) \in B\). For each \(b \in B\), let \(n(b)\) be the number of elements of \(A\) that are assigned to \(b\). If \(|A|>|B|\) (\(A\) has more elements than \(B\)) then there must exist some \(b \in B\) such that \(n(b)>1\).

Pigeonhole principle version 2 (Dijkstra): Let \(S\) be a finite multiset of real numbers. Then \(\mathrm{minimum}(S) \leq \mathrm{average}(S) \leq \mathrm{maximum}(S)\).

Proof v2 ⇒ v1: Let \(f: A \to B\) be a function between finite sets and assume \(|A|>|B|\). We want to use Dijkstra’s principle to prove there exists \(b \in B\) such that \(n(b)>1\). Define the multiset \(S = \{ n(b) \mid b \in B\}\). Observe that the average of \(S\) is equal to the ratio of the sizes of the two sets:

\[ \mathrm{average}(S) = \frac{\sum_{b \in B} n(b)}{|B|} = \frac{|A|}{|B|} \]

Since we assumed \(|A| > |B|\), this ratio is greater than 1:

\[ \mathrm{average}(S) = \frac{|A|}{|B|} > 1 \]

Combining this with Dijkstra’s principle, \[ \mathrm{maximum}(S) \geq \mathrm{average}(S) > 1 \] Therefore \[ \mathrm{maximum}(S) > 1 \] This means \(S\) contains a number larger than 1, which corresponds to an element \(b\) with \(n(b)>1\), so that completes the proof!

Multicellularity

Wednesday March 20 2024

Humans are made of cells, and societies are made of humans. Are we, like, cells in a “social organism”?

Some references to find out include:

The major transitions in evolution (1995) by John Maynard Smith (author of Evolution and the Theory of Games (1982)) and Szathmáry.

Major evolutionary transitions in individuality (2015) gives some necessary conditions: “cooperation, division of labor, communication, mutual dependence, and negligible within-group conflict”.

Ecological scaffolding and the evolution of individuality (2020) by Rainer, Bourrat et al. Includes a mathematical model.

The information theory of individuality (2020) by Krakauer, Flack, et al.

Some other keywords include: group agents, collective agency, enactive theory of agency, sense-making, bio-semiosis, ontologies of organismic worlds

Why does gimbal lock happen?

Wednesday March 13 2024

Quick, explain gimbal lock! 🌐

When you represent rotations by 3 numbers, the Euler angles, this corresponds to a map \(f:T^3 \to RP^3\) from the 3-dimensional torus to the 3-dimensional real projective space, regarded as differentiable manifolds. It can be proven that any such map cannot be a covering map ands a result at some points of the domain the map has a rank of 2, rather than 3. Those are the points where the gimbal lock occurs.

Vakil’s easy exercise

Wednesday March 13 2024

Here is “easy exercise” #11.1.A from Vakil about the spectrum of a commutative ring:

Show that \(\mathrm{dim} \, \mathrm{Spec} \, R = \dim \, R\)

Um… I at least think that the left side refers to the topological dimension and the right refers to the Krull dimension.

Reversible subsystem

Wednesday March 13 2024

Let \(X\) be a set and \(f:\mathbb{N}\times X \to X\) be a monoid action of the natural numbers on \(X\), an irreversible discrete-time dynamical system. Let \(P = \{ x \in X \mid \exists n\neq 0, f(n,x)=x \}\) be the set of periodic points, and let \(p:P \to \mathbb{N} - \{ 0 \}\) be a function assigning each points to its period. Then we can extend the monoid action \(f\) restricted to \(P\) to a group action \(g:\mathbb{Z} \times P \to P\) via

\[g(t, x) := f(t\bmod p(x),x)\]

This seems to be the “maximal reversible subsystem” (reference request) because the following diagram commutes, where \(i:\mathbb{N} \to \mathbb{Z}\) and \(j:P \to X\) are inclusions:

ℕ × j

ℕ × P ————————▶ ℕ × X

│ │

i × P │ │ f

▼ ▼

ℤ × P ——▶ P ——▶ X

g jParticle collision in cellular automata

Tuesday March 12 2024

Cellular automata are cool! They exhibit lots of emergent phenomenon like “particles” aka “gliders”. A particle is roughly defined as any finite configuration of cells that repeats after some fixed number of steps, displaced by some amount. That is, each particle has a period \(p\) (a natural number) and a displacement vector \(d\) which, for cellular automata on an integer grid, will be an integer vector. The “velocity” of the particle is then given by \(d/p\), a real-valued vector.

For extensive reading on this topic check out Solitons and particles in cellular automata: a bibliography by Steiglitz (1998).

What’s even more interesting is these particles can interact via collision and create new particles! For example the people on Game of Life wiki categorized all 71 possible glider collisions. Particle collision also played an important role in Matthew Cook’s proof that Rule 110 is a universal Turing machine. Here are all the particles in Rule 110:

Here is an interesting theorem about particle collision proved in Soliton-like behavior in automata by Park, Steiglitz, and Thurston (1986): suppose you have a cellular automata on the 1-dimensional integer lattice (such as the elementary cellular automata) and two particles. Suppose the first particle has a period of \(p_1\) and a displacement of \(d_1\) cells, and the second has period \(p_2\) and displacement \(d_2\). Assume \(\frac{d_1}{p_1} \neq \frac{d_2}{p_2}\) (they have different velocities) since otherwise they can never collide. Then the maximum number of possible outcomes of their collision is \(p_1 \cdot p_2 \cdot (d_2-d_1)\).

This theorem is generalized to higher dimensions in Upper bound on the products of particle interactions in cellular automata by Hordijk, Shalizi, Crutchfield (2000).

To formally define a particle in a cellular automaton, first need to define cellular automata. A cellular automata consists of

- a set \(X\) of “points” or “cells” equipped with a transitive group action (the translation group) such as \(X=\mathbb{Z}^D\), the \(D\)-dimensional integer lattice,

- a set \(Y\) whose elements are called cell states or local states,

- a function \(F: (X \to Y) \to (X \to Y)\), where each function \(u:X \to Y\) is called field configurations or global states or something, and \(F\) must obey…

- \(F\) is translation-equivariant

- this means \(FT[u]=TF[u]\) for every \(u:X \to Y\) and every translation \(T\).

- \(F\) is a local rule, meaning \(F[u](x)\) only depends on \(u\) in some neighborhood \(N_x \subseteq X\) of \(x\).

- assuming translation equivariance we could equivalently define some universal neighborhood \(N\) as a subset of the translation group so that \(N_x=N+x\).

- by the Curtis–Hedlund–Lyndon theorem you could replace this with the assumption \(F\) is continuous in the so-called “prodiscrete topology” on \(U\).

In order to define a particle-like solution, it needs to be “localized” in some way, and so you need to choose some background state. A particle with respect to a background \(0_Y \in Y\) is then a configuration \(u\) such that

- \(\{ x \in X \mid u(x) \neq 0_Y \}\) is a finite set

- there exists \(p \geq 1\) and a displacement \(d \in \mathbb{Z}^D\) such that such that \(F^p[u] = T^d[u]\), where \(T^d\) denotes translation action of \(d\),

- possibly some secret third property that says it can’t be decomposed into smaller disjoint particles

If \(u\) is finite and \(F\) is a local rule then \(F[u]\) is also finite, so finiteness is a forward time-invariant property. The second condition says after \(p\) steps the spot returns to its original configuration with some displacement. If \(d\) is the corresponding displacement element then the spot moves with average velocity \(v = \frac{d}{p}\).

Wall collision. In one dimension, when \(X = \mathbb{Z}\), another type of localized solution are traveling fronts which connect two different equilibria. These are only localized in one-dimension; in general they form a boundary between two regions of space and are \((D-1)\)-dimensional. In physics they are called domain walls. Let’s also call them walls: define a (1D periodic traveling) wall to be a configuration \(u: \mathbb{Z}^1 \to Y\) such that

there exists \(p \in \mathbb{N}\) and \(d \in X\) such that \(F^p[u] = T^d[u]\).

there exist points \(x_L,x_R \in \mathbb{Z}\) such that \(u\) is constant on \((-\infty,x_L]\) and \([x_R,\infty)\).

Returning to the Rule 184 example, it said on Wikipedia he classified all the collisions, but I don’t actually see that, so let’s do it ourselves. The previous theorem seems to suggest an upper bound of 8 or 16 outcomes depending on the interactants, which is clearly an overestimate because the interactions all have unique outcomes - let’s have a look! Mind the notation: 0s and 1s denote cells that are in the body of a spot while . and , denote background 0s and 1s. Just based on the signs of the velocities alone we can determine there are 5 possible collisions:

- \(\gamma^+ \otimes \gamma^- \to *\), annihilation to the alternating background:

00,.,.,.11

,00,.,.11.

.,00,.11.,

,.,0011.,.

.,.,.,.,.,

,.,.,.,.,.

.,.,.,.,.,- \(\gamma^+ \otimes \alpha^- \to \alpha^-\)

,00,.,.,01

.,00,.,01,

..,00,01,,

.,.,011,,,

,.,01,,,,,

.,01,,,,,,

,01,,,,,,,- \(\omega^+ \otimes \gamma^- \to \omega^+\) mirrors the previous case

01.,.,.11.

.01.,.11.,

..01.11.,.

...011.,.,

.....01.,.

......01.,

.......01.- \(\alpha^+ \otimes \beta \to \alpha^-\)

10...01,,

.10..01,,

,.10.01,,

.,.1001,,

,.,.10,,,

.,.10,,,,

,.10,,,,,

.10,,,,,,- \(\beta \otimes \omega^- \to \omega^+\) mirrors previous case

..01,,,10

..01,,10,

..01,10,.

..0110,.,

..010,.,.

...01.,.,

....01.,.

.....01.,

......01.- \(\omega^+ \otimes \alpha^- \to \beta\)

01.,.,.,01

.01.,.,01,

..01.,01,,

...0101,,,

....01,,,,

....01,,,,

....01,,,,Returning to the \(D\)-dimensional system, note that even though the system is non-reversible, we can extend to a reversible system on spots. Let \(P \subseteq U\) be the subset of spot configurations and let \(p:P \to \mathbb{N}\) assign each spot its period. Then we can extend \(F^t\) to an action on \(P\) for all \(t \in \mathbb{Z}\) via

\[ F^t u_t = F^r T_d^n [u], \quad t = np + r, \quad 0 \leq r < n \]

where \(t = np + r\) is the Euclidean division of \(t\) by \(p\).

Next, given two states \(u_1,u_2 \in U\), define the following commutative binary operation.

\[ (u_1 \oplus u_1)(x) = \begin{cases} u_1(x) & u_2(x) = 0_Y \\ u_2(x) & u_1(x) = 0_Y \\ 0_Y & u_1(x) \neq 0_Y \text{ and } u_2(x) \neq 0_Y \end{cases} \]

Let \(u_1\) and \(u_2\) be two particles with period \(p_i\) and displacement \(d_i\) for \(i=1,2\) and let \(v_i=\frac{d_i}{p_i}\). I don’t know how to prove it, but if \(v_1 \neq v_2\), then there exists \(t_0 \in \mathbb{Z}\) such that \[t \leq t_0 \implies F^t[u_1 \oplus u_2] = F^t[u_1] \oplus F^t[u_2]\] This defines a sequence of states \(u_t\) which is a \(\oplus\) of two incoming particles as \(t \to -\infty\) and which is unconstrained as \(t \to +\infty\).

Reading:

- Ceccherini-Silberstein, Coornaert (2010). Cellular automata and groups

- Pivato (2007). Defect particle kinematics in one-dimensional cellular automata

- Cook (2004). Universality in elementary cellular automata

- Aaronson (2002). Book review: “New Kind of Science”"

- Hordijk, Shalizi, Crutchfield (2000). Upper bound on the products of particle interactions in cellular automata

- Steiglitz (1998) . Solitons and particles in cellular automata: a bibliography

- Das, Mitchell, Crutchfield (1994). A genetic algorithm discovers particle-based computation in cellular automata

- Park, Steiglitz, Thurston (1986). Soliton-like behavior in automata

PS: Reflecting on an earlier post my definition of moving spot was not quite correct, because it doesn’t account for the important case of periodic background. So, how do account for this? A background state is one that satisfies \(F^t[u]=u\) for some \(t\) and \(T^d[u] = u\) for some \(d\). Thus it is periodic in both space and time. If \(u_1\) and \(u_2\) are two background states, then a wall (or wave) connecting them should perhaps be a function \(w\) such that there exist \(x_L\) and \(x_R\) such that \(u_1(x)=w(x)\) for all \(x<x_L\) and \(w(x)=u_2(x)\) for all \(x>x_R\). The set \([x_L,x_R]\) will be called the support of \(w\). Without loss of generality assume \(x_L\) is maximal and \(x_R\) is minimal. Notice if the support of \(w\) is finite and \(F\) is local then so is the support of \(w\). Finally, we can define a moving wall to be such a \(w\) along with \(p\) and \(d\) such that \(F^p[w]=T^d[w]\). More generally you could define a domain wall like this I guess… \(u_1\) and \(u_2\) are two global patterns, \(A_1\) and \(A_2\) are two subsets of space where the state matches \(u_1\) and \(u_2\) respectively, and \((A_1 \cup A_2)^C\) is the domain wall. The problem is how to define interactions in this case? And prove anything about them?

Solipsistic theorem

Thursday March 7 2024

Recently my friend asked my thoughts on dualism vs monism. I had to look up what is the difference. But it made me start thinking about Mealy machines, a mathematical formalization of systems with input, output, and state (memory) - like a computer! They are an even simpler version of the von Neumann architecture. Formally a Mealy machine consists of:

- a set of possible states, \(S\)

- a set of possible inputs, \(I\)

- a set of possible outputs, \(O\)

- a function \(f: S \times I \to S \times O\) called the “update” function which takes the current state value and an input value and yields the next state value and an output value. Here \(\times\) is the Cartesian product of sets.

Given an initial state and a sequence of inputs, a Mealy machine can run autonomously and produce a sequence of outputs. Specifically, given an initial state \(s_0\) and a sequence \(i_0,i_1,i_2,...\) of inputs, the Mealy machine determines a sequence \(s_1,s_2,s_3,\cdots\) of states and \(o_1,o_2,o_3,...\) of outputs using the update function, \((s_{t+1},o_{t+1}) = f(s_t,i_t)\) for each \(t=0,1,2,...\).

Animation based on this blog post.

In “mind-body dualism” I imagine there are two Mealy machines, hooked up to one another, where one machine is the world, including the body, and other is the mind. We observe our senses, process thoughts, take action. Likewise the world “observes” our action, processes it via physics, and “acts” on us by sending us a new observation in an endless cycle… 🌹🪰😊

Since there is a symmetry between the agent and environment, with the output of one being the input of the other and vice versa, I will replace the words “input” and “output” with “interface”, and always use capital letter I. So a “dualistic system” consists of

- four sets: \(S_1,S_2,I_1,I_2\)

- two functions:

- \(f_1: S_1 \times I_1 \to S_1 \times I_2\)

- \(f_2: S_2 \times I_2 \to S_2 \times I_1\)

Thus, \(S_1\) and \(S_2\) are the state sets of the two machines, and \(I_1\) and \(I_2\) are their “interfaces” (input/output and output/input respectively). This is not unlike a partially observed Markov decision process which are commonly used in reinforcement learning.

Next consider a system with multiple agents: suppose there are \(n\) total. Then we have

- sets \(S_1, S_2^1, ..., S_2^n\) of agent states,

- sets \(I_1^1, ..., I_1^n, I_2^1, ..., I_2^n\) of interfaces,

- functions:

- \(f_1: S_1 \times \prod_i I_1^i \to S_1 \times \prod_i I_2^i\)

- \(f_2^i: S_2^i \times I_2^i \to S_2^i \times I_1^i\) for each \(i=1,...,n\)

This is like having one central Mealy machine, the environment, hooked up to a bunch of other Mealy machines, the agents. Similar concepts found in multi-agent reinforcement learning. When \(n=1\), it’s exactly the same as a dualistic system.

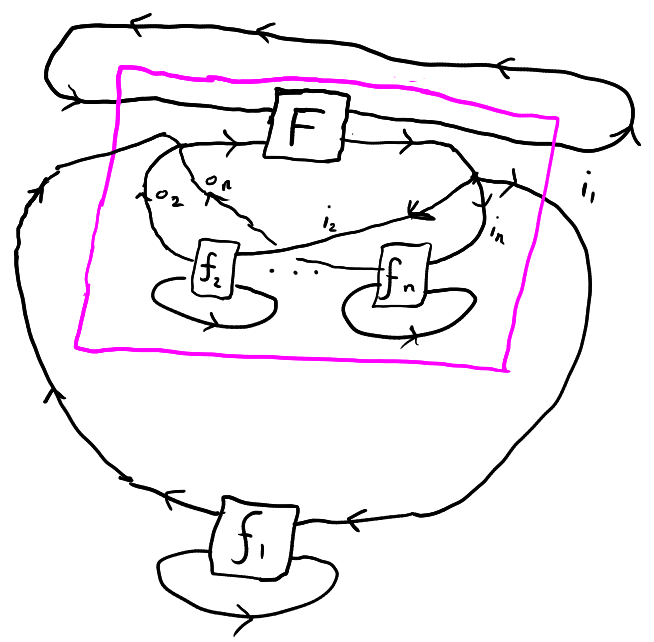

Theorem (informal): any agent in a multi-agent system can view themselves as part of a dualistic system, where all the other agents are part of the environment. See the figure below, where the pink box designates the enlarged environment containing every agent except \(j\), where \(j=1\).

Theorem (more formal): choose an agent \(j\). Then we can turn the multi agent system into a dual system where \(S_1 = S_1 \times \prod_{i \neq j} (S_2^i \times I_1^i \times I_2^i)\), \(S_2 = S_2^j\), \(I_1 = I_1^j\), \(I_2 = I_2^j\), and \(f_2 = f_2^j\). As for \(f_1\), it can be described in psuedo-psuedocode as follows:

- it takes \((s_1, s_2^1, i_1^1,i_2^1, ..., s_2^{j-1}, i_1^{j-1},i_2^{j-1},s_2^{j+1},i_1^{j+1},i_2^{j+1}, ..., s_2^n,i_1^n,i_2^n)\) and \(i_1^j\) as input

- first it combines \(i_1^j\) with all the other \(i_1\)’s along with \(s_1\) and computes \(f_1\), yielding the new \(s_1\) value along with new \(i_2\) values for all the \(n-1\) agents aside from \(j\).

- each agent \(i\neq j\) is given its new \(i_2\) value, combined with its current state \(s_2\), to produce the new \(s_2\) value and the next \(i_1\).

- thus all the state values have been updated, and it sends the output \(i_2^j\) back to agent \(j\).

Todo. Is there any mention of this concept in literature? It sounds like something Douglas Hofstadter](https://en.wikipedia.org/wiki/I_Am_a_Strange_Loop) would say. It reminds me of Cartesian framing. You could express using the language of the polynomial functors book by Spivak as in Example 4.60. But they use Moore machines instead of Mealy machines, which in their language is a lens \(Sy^S \to Oy^I\), so I would have to redo all the animations.

Short tour of dynamical systems

Tuesday March 5 2024

If I had to give a short tour of the mathematical field of dynamical systems, given that I am totally unqualified to do so, what would I say?

It is a very general concept: a dynamical system has a state that changes over time and that’s about it. dynamical systems are everywhere, a swinging pendulum, the planets in the solar system obeying Newton’s laws, indeed the whole universe itself and its physics (with some caveats). But what is state, and what is time? The simplest answer is to let \(X\) be a set of possible states, and let \(f:X \to X\) be a function from \(X\) to itself. Given an initial point \(x_0 : X\), we can generate the sequence \(x_1,x_2,x_3,\cdots\) by applying \(f\) repeatedly, where \(t=0,1,2,3,\cdots\) becomes the time variable. This is the world of discrete dynamical systems, aka iterated maps or recurrence relations, examples including the Fibonacci sequence, Collatz map, and logistic map. One studies fixed points, stable points, periodic points, attractors, period-doubling bifurcation, chaos, bifurcation digrams, the Feiegenbaum constants, bifurcation theory, arithmetic dynamics, complex dynamics like the Mandelbrot set, Sharkovskii’s theorem, Peixoto theorem.

Corollary of Sharkovskii’s theorem: Let \(I \subseteq \mathbb{R}\) be an interval and let \(f:I \to I\) be a continuous function. - if \(f\) has only finitely many periodic points, then they must all have periods that are powers of two - if there is a periodic point of period three, then there are periodic points of all other periods

Usually all that comes much later, after a more practical course in differential equations. In this context, the object under study may not even be a dynamical system by the standard definition, because we first need to show that solutions exist. For that we have Picard’s theorem. Then we can solve linear equations, other special methods, limit cycles, homoclinic and heteroclinic orbits, phase portraits, invariant manifolds, hyperbolic points, (Lyapunov) stability, the Hartman-Grobman theorem.

What is the difference between a differential equation and a dynamical system?

If you allow time to be either discrete or continuous, you might let \(X\) be a set, \(T\) be a monoid (or a group but I prefer monoid, since you don’t have to assume the system is reverseible). Then \(\phi:T \times X \to X\) is a monoid action of \(T\) on \(X\). Some say \(T\) is an arbitrary monoid, but the Handbook of Dynamical Systems requires \(T \subseteq \mathbb{R}\), that is, \(T\) must be a subset (sub-monoid) of the real numbers. If that seems arbitrary recall that \(\mathbb{R}\) is the “unique complete ordered field”. Then you can put additional structure on the state set like giving it a measure, then you get measure-preserving dynamical systems, topological conjugacy and structural stability, ergodic systems and the ergodic theorems, Poincare map and the Poincare recurrence theorem, I got bored and made this desmos animation for Poincare map, Hopf decomposition, Anosov flows, conservative and dissipative systems, symbolic dynamics, [cellular automata[(https://en.wikipedia.org/wiki/Cellular_automaton)], the Curtis–Hedlund–Lyndon theorem, Willems’ behavioral approach to systems theory.

Active research areas in no particular order: linear control, nonlinear control, dynamic programming, stochastic processes, random walks, stochastic PDEs, dynamical billiards seems pointless, integrable systems, PDEs, topological dynamics, nonlinear waves, fluid dynamics, holomorphic dynamics, dynamical zeta functions aka Ruelle zeta function, twist maps and Moser’s twist theorem (I assume they’re related), low-dimensional dynamics, Floer homology, renormalization group, traveling waves, algebraic dynamics, coupled oscillators, phase-locking, singular perturbation theory, conley index, blow-up phemenon, cocycles, cohomology, Oseledets theorem, blogpost by Terenace Tao, moduli space, Kupka-Smale theorem, Pugh’s closing lemma, Smale’s problems, Morse–Smale system, catastrophe theory, singularity theory, pattern formation, synchronization, applied category theory, Koopman theory, percolation, nonlocal interaction terms, higher order interaction, delay.

Open problems? Hilbert 16th problem is still open, Boltzmann–Sinai ergodic hypothesis was resolved recently TODO more here. Also todo more HISTORY.

More sections: PDE

Dynamical systems tour

Tuesday March 5 2024

How would I give a tour of the mathematical field of dynamical systems given that I am totally unqualified to do so?

It is a very general concept: a dynamical system has a state that changes over time and that’s about it. dynamical systems are everywhere, a swinging pendulum, the planets in the solar system obeying Newton’s laws, indeed the whole universe itself and its physics (with some caveats). But what is state, and what is time? The simplest answer is to let \(X\) be a set of possible states, and let \(f:X \to X\) be a function from \(X\) to itself. Given an initial point \(x_0 : X\), we can generate the sequence \(x_1,x_2,x_3,\cdots\) by applying \(f\) repeatedly, where \(t=0,1,2,3,\cdots\) becomes the time variable. This is the world of discrete dynamical systems, aka iterated maps or recurrence relations, examples including the Fibonacci sequence, Collatz map, and logistic map. One studies fixed points, stable points, periodic points, attractors, period-doubling bifurcation, chaos, bifurcation digrams, the Feiegenbaum constants, bifurcation theory, arithmetic dynamics, complex dynamics like the Mandelbrot set, Sharkovskii’s theorem, Peixoto theorem.

Usually all that comes much later, after a more practical course in differential equations. In this context, the object under study may not even be a dynamical system by the standard definition, because we first need to show that solutions exist. For that we have Picard’s theorem. Then we can solve linear equations, other special methods, limit cycles, homoclinic and heteroclinic orbits, phase portraits, invariant manifolds, hyperbolic points, (Lyapunov) stability, the Hartman-Grobman theorem.

If you allow time to be either discrete or continuous, you might let \(X\) be a set, \(T\) be a monoid (or a group but I prefer monoid, since you don’t have to assume the system is reverseible). Then \(\phi:T \times X \to X\) is a monoid action of \(T\) on \(X\). Some say \(T\) is an arbitrary monoid, but the Handbook of Dynamical Systems requires \(T \subseteq \mathbb{R}\), that is, \(T\) must be a subset (sub-monoid) of the real numbers. If that seems arbitrary recall that \(\mathbb{R}\) is the “unique complete ordered field”. Then you can put additional structure on the state set like giving it a measure, then you get measure-preserving dynamical systems, topological conjugacy and structural stability, ergodic systems and the ergodic theorems, Poincare map and the Poincare recurrence theorem, I got bored and made this desmos animation for Poincare map, Hopf decomposition, Anosov flows, conservative and dissipative systems, symbolic dynamics, [cellular automata[(https://en.wikipedia.org/wiki/Cellular_automaton)], the Curtis–Hedlund–Lyndon theorem, Willems’ behavioral approach to systems theory.

Active research areas in no particular order: linear control, nonlinear control, dynamic programming, stochastic processes, random walks, stochastic PDEs, dynamical billiards seems pointless, integrable systems, PDEs, topological dynamics, nonlinear waves, fluid dynamics, holomorphic dynamics, dynamical zeta functions aka Ruelle zeta function, twist maps and Moser’s twist theorem (I assume they’re related), low-dimensional dynamics, Floer homology, renormalization group, traveling waves, algebraic dynamics, coupled oscillators, phase-locking, singular perturbation theory, conley index, blow-up phemenon, cocycles, cohomology, Oseledets theorem, blogpost by Terenace Tao, moduli space, Kupka-Smale theorem, Pugh’s closing lemma, Smale’s problems, Morse–Smale system, catastrophe theory, singularity theory, pattern formation, synchronization, applied category theory, Koopman theory, percolation, nonlocal interaction terms, higher order interaction, delay equations.

4 types of categories to know about

Thursday February 29 2024

If you wish to know more about category theory, then the following 4 types may become of importance to you:

Concrete categories are ones where, roughly speaking, the objects are sets with some additional structure. Many objects in mathematics, like fields, vector spaces, groups, rings, and topological spaces, can be represented this way. For a detailed list of examples check out Example 1.1.3 in Category Theory in Context (free pdf). For example, if X is a topological space, then X would be an object in the category of topological spaces. Now, every topological space has an underlying set of points. This is represented by the forgetful functor from the category of topological spaces to the category of sets, sometimes denoted \(U:\mathrm{Top} \to \mathrm{Set}\). In that case \(U(X)\) would be the underlying set of points of \(X\).

Monoidal categories. Many categories have a way to combine two objects, like the Cartesian product \(A \times B\) of sets, the direct product of two groups, the tensor product of two vector spaces, etc, that constitute a binary operation. Furthermore in each case there is a unit object which serves as an identity for the binary operation: the singleton set (with one element), the trivial vector space (with one point), etc. This is the premise of a monoidal category. Insert segue into Rosetta stone paper here.

Cartesian closed categories. This type of category is commonly used to represent the type systems within some programming languages. Given two objects \(A\) and \(B\) in a “CCC”, you can form the product \(A \times B\) as well as the exponential \(B^A\), also written more suggestively as \(A \to B\). When \(A\) and \(B\) are sets (or types) then \(B^A\) can be understood as the set (type) of functions (or “programs”) from \(A\) to \(B\). If you combine this with point 2, so that the product \(\times\) obeys the monoidal laws, then you get a closed monoidal category. I need a better reference on this.

Abelian categories. If you ever try to study algebraic topology you will come across the concept of homology (or cohomology) and the notion of a chain complex. Let \(Ab\) be the category of abelian groups (which is a concrete category as well as a monoidal category). A chain complex of abelian groups is a sequence \(A_0, A_1, A_2, \cdots\) of abelian groups, along with homomorphisms \(d_n: A_{n+1} \to A_n\) for each \(n\), such that \(d_{n} \circ d_{n+1} = 0\) for all \(n\), where on the left is the composition of \(d_{n+1}\) with \(d_n\) and on the right is the zero morphism from \(A_{n+2}\) to \(A_n\), which sends every element to zero. If one asks, “what is the most general category I can replace \(Ab\) with?” the answer would be one where you have zero morphisms, and a few other things… anyway, people already figured this out, and they are called abelian categories!

Autoprovers > autoformalizers?

Wednesday February 28 2024

Autoformalization is a way to encode all the mathematical work that’s already been done by inputting the pdfs or latex files into a computer and asking it to parse out all the little details into a completely rigorous, type-checked proof.

But what if it’s easier to just redo all the work from scratch? This is the basic premise of automated theorem proving. Let’s go with the following dubious logic: if there was some amazing method to automatically prove theorems without advances in deep learning, then they would have already been found. Therefore it suffices to look at automated theorem proving in the context of neural theorem provers specifically.

Alright! Let’s first look at GPT-\(f\) from 2 people at OpenAI.

Here’s a list of some neural theorem provers:

- Stanislas Polu and Ilya Sutskever. Generative language modeling for automated theorem proving. arXiv preprint arXiv:2009.03393, 2020

Jesse Michael Han, Jason Rute, Yuhuai Wu, Edward W Ayers, and Stanislas Polu. Proof artifact co-training for theorem proving with language models. arXiv preprint arXiv:2102.06203, 2021. [18] Stanislas Polu, Jesse Michael Han, Kunhao Zheng, Mantas Baksys, Igor Babuschkin, and Ilya Sutskever. Formal mathematics statement curriculum learning. arXiv preprint arXiv:2202.01344, 2022. [19] Daniel Whalen. Holophrasm: a neural automated theorem prover for higher-order logic. arXiv preprint arXiv:1608.02644, 2016

I think about the success of the AlphaZero, the machine learning algorithm which became superhuman at chess, Go and other games via self-play using Monte-Carlo tree search (MCTS). A lot of methods I see now use existing training data, like existing math libraries, databases or forums. But don’t we need a way to automatically generately data like in self-play? But there’s no self-play in mathematics, aside from excessive self-admiration. I need to read more but this paper, Hypertree proof search for neural theorem proving (2022), was inspired by AlphaZero. They say this:

Theorem proving can be thought of as computing game-theoretic value for positions in a min/max tree: for a goal to be proven, we need one move (max) that leads to subgoals that are all proven (min). Noticing heterogenity in the arities of min or max nodes, we propose a search method that goes down simultaneously in all children of min nodes, such that every simulation could potentially result in a full proof-tree.

Discrete-time birth-death process from dynamical system

Monday February 26 2024

A birth-death process is a (continuous-time) Markov chain where the state-space is \(N=\{0,1,2,\cdots\}\) and where for every \(n\) there is a transition to \(n+1\) (birth) and a transition to \(n-1\) (death), except \(n=0\) which can only transition to \(n=1\).

Each of these transitions has a rate assigned, \(\alpha_n\) for the birth rate from \(n\) to \(n+1\) and \(\beta_n\) for the death rate from \(n+1\) to \(n\). For example, if \(n\) was the number of individuals with an infectious disease in a closed system, then \(\alpha_0=0\) because at least 1 individual is needed to spread, making \(n=0\) an absorbing state.

You can convert this to a discrete-time Markov chain by defining \[ p_n = \frac{\alpha_n}{\alpha_n+\beta_{n-1}} \] and \[ q_n = \frac{\beta_n}{\alpha_{n+1}+\beta_n} \] assuming \(\alpha_n+\beta_{n+1}\neq0\) for all \(n\). In this model, \(p_n\) is the probability of transitioning from \(n\) to \(n+1\) and \(q_n\) is the probability of transitioning from \(n+1\) to \(n\).

What if you have a dynamical system on a state space \(X\) and a map \(f:X \to \mathbb{N}\) assigning each state to a natural number? Can you reconstruct a birth-death process from this? It appears so, here’s how:

For each \(n \in N\) let \(X_n\) be the preimage of \(n\). We should assume \(X_n\) only borders \(X_{n-1}\) and \(X_{n+1}\), that is, \[ |m-n| \geq 2 \implies X_m \cap X_n = \emptyset, \quad \forall m,n \in N \]

Then, for each \(n\) and for each \(x_0 \in X_n\), the orbit \(x(t)\) starting from \(t=0\) at \(x_0\) can either 1) remain in \(X_n\) forever, 2) leave into \(X_{n-1}\), or 3) leave into \(X_{n+1}\). Thus partition \(X_n\) into \(A_n^+ \cup A_n \cup A_n^-\). (Assume \(A_0^-=\emptyset\).)

At this point, we also need a measure on \(X\). In fact let’s just assume we have a measure-preserving dynamical system so that we can measure (some) subsets \(S \subseteq X\) and get a real number \(\mu(S)\) between 0 and 1 in return, with \(\mu(X)=1\) and \(\mu(\emptyset)=0\).

If we assume for all \(n\) that \(A_n^+\) and \(A_n^-\) are measurable, and \(\mu(A_n^+ \cup A_n^-)\neq 0\), then we can define \[ p_n = \frac{\mu(A_n^+)}{\mu(A_n^+ \cup A_n^-)} \] and \[ q_n = \frac{\mu(A_n^-)}{\mu(A_n^+ \cup A_n^-)} \] for all \(n\). In other words, for a given \(n\), \(\alpha_n\) is the proportion of trajectories This seems to yield a valid discrete-time birth-death process. :)

TODO: shouldn’t this apply to continuous time too..? In that case i think you integrate the time-to-exit over \(A_n^\pm\).

Social constructs in biology

Thursday February 8 2024

Gender is a social construct means the roles, norms and behaviors we associate to biological sex (male/female) are not essential, they are agreed upon collaboratively over time (though not everyone may be involved in the collaboration) and enshrined as consensus reality. The easiest way to “prove” this is to observe that gender norms differ between locations and time periods.

That’s all fine- gender identity is also a social construct, and transgender people can be “defined” as ones whose identity does not correspond to their sex.

But there’s another problem: biological sex is also a social construct. This is where most rightoids start screeching.

The simplest way to see this is maybe to read about sex verification in the Olympics, I included some references in the appendix. Hint: it’s not good!

The logic of sex as a social construct is just about as simple: sex (male/female) is a model or framework that we use to understand our observations, and to build an understanding of the world. This model is useful because it has genuine predictive power in many contexts, that is, the contexts which fall into the model’s assumptions. But the existence of intersex and chromosome-variant people clearly show the model will fail in other cases, i.e. will not provide accurate descriptions/predictions of reality.

It’s like the quote by George Box:

All models are approximations. Assumptions, whether implied or clearly stated, are never exactly true. All models are wrong, but some models are useful. So the question you need to ask is not “Is the model true?” (it never is) but “Is the model good enough for this particular application?”

Models of binary sex and binary gender aid our communication and actions in some contexts, and not in others. In the edge cases where the coarse-grained binary models break down, we shouldn’t hesitate to abandon them in favor of a more fine-grained model of sex and gender diverity. That doesn’t however discout the utility of the binary model in some circumstances, for example when debating the GOP for the right to choice. In that case, in my opinion, it’s worth sticking to the coarse grained model, because there’s no benefit or additional clarity added by using the fine-grained one.

references

- wikipedia: sex verification in sports

- The Humiliating Practice of Sex-Testing Female Athletes by Ruth Padawer (2016)

- Personal Account: A woman tried and tested by María José Martínez-Patiño (2005)

appendix

From The Humiliating Practice of Sex-Testing Female Athletes by Ruth Padawer (2016):

In 1952, the Soviet Union joined the Olympics, stunning the world with the success and brawn of its female athletes. That year, women accounted for 23 of the Soviet Union’s 71 medals, compared with eight of America’s 76 medals. As the Olympics became another front in the Cold War, rumors spread in the 1960s that Eastern-bloc female athletes were men who bound their genitals to rake in more wins.